Cloud Governance

Products & Technology API Integration Software Development Cloud Governance Cloud governance is an approved framework that establishes, enforces, and monitors


Innovation and scalability have become requirements for businesses to survive and thrive in a fast-changing technology world. Legacy infrastructure no longer supports the applications that end users want. Today, organizations must transform their businesses with new platforms that are designed to support digital transformation initiatives and are built for scale. This is why cloud-native technologies have become essential to business success.

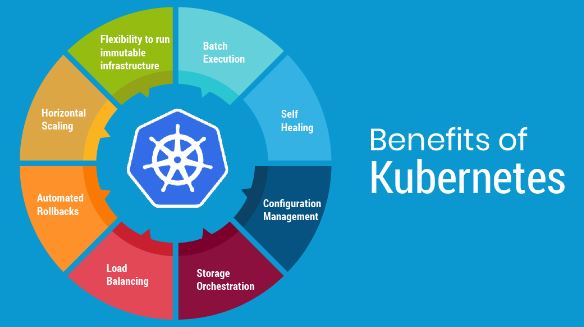

By using cloud-native solutions such as Kubernetes, organizations can create and run innovative, portable, microservices-based applications. Kubernetes supports both stateless and stateful applications. Kubernetes is now developing into a general-purpose computing platform that serves as a fundamental building block of modern cloud infrastructure and applications.

Kubernetes is a container orchestration platform for scheduling and automating the deployment, management, and scaling of containerized applications. The complexity of running software applications grows when they are spread across several servers and containers. To handle this complexity, Kubernetes offers an open-source API that manages where and how those containers will run. The focus is on the application workload, not the underlying infrastructure components.
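To make the idea of describing a workload through the API more concrete, here is a minimal sketch that builds a Deployment manifest as a plain Python dictionary and prints it as JSON. The field names follow the `apps/v1` Deployment schema; the function name `deployment_manifest`, the application name `web`, and the image `example.com/web:1.0` are placeholders chosen for illustration only.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            # Desired state: how many identical pods should be running.
            "replicas": replicas,
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # "web" and "example.com/web:1.0" are placeholder values.
    manifest = deployment_manifest("web", "example.com/web:1.0", 3)
    print(json.dumps(manifest, indent=2))
```

The manifest only states what should run and how many copies; it says nothing about which servers to use. Saved to a file, JSON like this can be passed to `kubectl apply -f`, which accepts JSON as well as YAML.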

Kubernetes schedules and automates container-related tasks throughout the application lifecycle, including:

Deployment: Kubernetes deploys a predefined number of containers to a specified host and keeps them running in the desired state.

Rollouts: A rollout is a modification to a deployment. You can start, pause, resume, or roll back rollouts with Kubernetes.

Service discovery: Kubernetes can automatically expose a container to other containers or the internet using a DNS name or IP address.

Storage provisioning: Kubernetes mounts persistent local or cloud storage for containers as needed.

Load balancing: Kubernetes can distribute the workload across the network based on CPU utilization or custom metrics to maintain performance and stability.

Autoscaling: When traffic grows, Kubernetes can add pod replicas and, with a cluster autoscaler, additional nodes to manage the increased workload.

Self-healing for high availability: Kubernetes can automatically restart or replace a failed container to avoid downtime. Additionally, it can remove any containers that fail their health checks.
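Several of the tasks above (deployment to a desired state, scaling, and self-healing) come down to repeatedly comparing what should be running with what is running. The sketch below is a toy model of that idea, not Kubernetes code: `ToyCluster`, `Container`, and the `web-N` naming are hypothetical, and a real cluster does this through controllers and the kubelet.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Container:
    name: str
    healthy: bool = True


@dataclass
class ToyCluster:
    desired_replicas: int
    containers: list = field(default_factory=list)
    _ids: count = field(default_factory=count, repr=False)

    def reconcile(self) -> None:
        """Move the actual state to match the desired state."""
        # Self-healing: drop containers that fail their health check.
        self.containers = [c for c in self.containers if c.healthy]
        # Scale up: start replacements until the desired count is reached.
        while len(self.containers) < self.desired_replicas:
            self.containers.append(Container(f"web-{next(self._ids)}"))
        # Scale down: stop surplus containers if the desired count dropped.
        del self.containers[self.desired_replicas:]


if __name__ == "__main__":
    cluster = ToyCluster(desired_replicas=3)
    cluster.reconcile()                     # initial deployment
    cluster.containers[0].healthy = False   # simulate a crashed container
    cluster.reconcile()                     # the failed container is replaced
    print([c.name for c in cluster.containers])
```

The operator only changes `desired_replicas` or lets a health check fail; every correction happens inside `reconcile`, which is why the same loop covers first deployment, recovery, and scaling.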

Kubernetes clusters enable the quick and easy migration of containerized applications from on-premises infrastructure to hybrid deployments across any cloud provider’s public cloud or private cloud infrastructure without compromising the app’s features or performance.

Containerization provides a lightweight, more agile way to handle virtualization than virtual machines. Kubernetes is adaptable enough to manage containers on various infrastructures, including public clouds, private clouds, and on-premises servers.

Additionally, it works with any container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O. Kubernetes gives you the flexibility to grow without needing to rearchitect your infrastructure.

Container deployment across multiple compute nodes, whether on the public cloud, on-site VMs, or physical on-premises machines, is scheduled and automated by Kubernetes. The platform not only enables easy horizontal and vertical scaling of applications, but also makes it easy to scale infrastructure resources up and down to meet demand faster.
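As an illustration of horizontal scaling, the sketch below computes a replica count from a measured metric. The core formula, the ceiling of current replicas multiplied by the ratio of current to target metric, follows the one described in the Kubernetes Horizontal Pod Autoscaler documentation. The function name, the replica bounds, and the tolerance value used here are illustrative assumptions, and the real autoscaler applies further rules (stabilization windows, pod readiness) that are left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Return how many replicas are wanted for the observed metric value."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the replica count does not flap.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target.
    print(desired_replicas(4, 90, 60))
```

With four replicas averaging 90% CPU against a 60% target, the ratio is 1.5, so the sketch asks for six replicas; at 30% it would ask for two.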

The capability of Kubernetes to roll back an application change if something goes wrong is an additional advantage.
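The rollback idea can be pictured as a history of applied versions. The sketch below is a deliberately simplified model with hypothetical names (`RolloutHistory`, the `web:1.x` image tags); in a real cluster the revision history is kept by the Deployment's ReplicaSets and a rollback is typically triggered with `kubectl rollout undo`.

```python
class RolloutHistory:
    """Keeps every applied image so an earlier one can be restored."""

    def __init__(self, initial_image: str) -> None:
        self.revisions = [initial_image]

    @property
    def current(self) -> str:
        return self.revisions[-1]

    def rollout(self, image: str) -> None:
        # A rollout records a new revision on top of the history.
        self.revisions.append(image)

    def rollback(self) -> str:
        # Discard the latest revision and return to the one before it.
        if len(self.revisions) < 2:
            raise RuntimeError("no earlier revision to roll back to")
        self.revisions.pop()
        return self.current


if __name__ == "__main__":
    history = RolloutHistory("web:1.0")
    history.rollout("web:1.1")   # the new version turns out to be faulty
    print(history.rollback())    # back to the previous version
```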

Kubernetes supports the reliability of containerized applications. It automatically places and balances containerized workloads to keep the system operational and scales clusters to meet growing demand. In a multi-node cluster, if one node fails, the workload is redistributed across the remaining nodes without affecting availability for users.
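The node-failure behavior described above can be sketched as moving every pod from the failed node to whichever surviving node currently holds the fewest pods. This is a toy model under stated assumptions: the function name, node names, and pod names are hypothetical, and the real scheduler weighs resource requests, affinity rules, and more rather than a simple pod count.

```python
def reschedule_after_failure(placement: dict, failed_node: str) -> dict:
    """Return a new placement with the failed node's pods moved elsewhere."""
    survivors = {
        node: list(pods)
        for node, pods in placement.items()
        if node != failed_node
    }
    if not survivors:
        raise RuntimeError("no healthy nodes left")
    for pod in placement.get(failed_node, []):
        # Pick the least-loaded surviving node; ties go to the first name.
        target = min(sorted(survivors), key=lambda n: len(survivors[n]))
        survivors[target].append(pod)
    return survivors


if __name__ == "__main__":
    placement = {"node-a": ["p1", "p2"], "node-b": ["p3"], "node-c": ["p4"]}
    print(reschedule_after_failure(placement, "node-a"))
```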

Kubernetes can be configured across one or more public cloud services to maintain very high uptime for critical workloads. Amazon is a frequently cited example of a company that switched from a monolithic to a microservices design, although that move predates Kubernetes.

Kubernetes automatically places containers onto nodes to make the most efficient use of resources. With Kubernetes container orchestration, workflows become more efficient, requiring fewer servers and less laborious manual administration.
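Fitting containers onto nodes is a form of bin packing. The sketch below uses the classic first-fit-decreasing heuristic to show why good placement needs fewer servers. It is not the Kubernetes scheduler's algorithm, which filters and scores candidate nodes; the function name, the workload names, and the CPU figures in millicores are hypothetical.

```python
def first_fit_decreasing(requests: dict, node_capacity: int) -> list:
    """Pack CPU requests (millicores) onto equal-sized nodes, largest first."""
    nodes = []  # each node is {"free": remaining capacity, "pods": [names]}
    ordered = sorted(requests.items(), key=lambda kv: kv[1], reverse=True)
    for name, cpu in ordered:
        if cpu > node_capacity:
            raise ValueError(f"{name} requests more than one node can offer")
        for node in nodes:
            if node["free"] >= cpu:
                break  # first node with enough room
        else:
            # No existing node fits, so bring up a new one.
            node = {"free": node_capacity, "pods": []}
            nodes.append(node)
        node["free"] -= cpu
        node["pods"].append(name)
    return nodes


if __name__ == "__main__":
    requests = {"api": 600, "db": 700, "cache": 300, "worker": 400}
    for node in first_fit_decreasing(requests, node_capacity=1000):
        print(node)
```

In this example the four workloads request 2000 millicores in total and end up on two fully used 1000-millicore nodes, whereas placing each workload on its own server would take four.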

Kubernetes should be introduced as early as possible in the development lifecycle so that developers can test their code and avoid costly mistakes later on.

Applications built using a microservices architecture are made up of discrete functional units that interact with one another via APIs.

This allows IT teams to work more effectively and enables development teams to form smaller groups that each concentrate on a particular feature. Namespaces provide a scope for access control within a cluster for increased efficiency. Namespaces are a way to set up multiple virtual sub-clusters within the same physical Kubernetes cluster.
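One way to picture namespaces as virtual sub-clusters is a registry in which every object is identified by its namespace together with its name. The sketch below is a toy model with hypothetical names (`ToyApiServer`, `team-a`, `team-b`); it only shows name isolation and leaves out the access-control rules and resource quotas that real clusters attach to namespaces.

```python
class ToyApiServer:
    """Stores objects keyed by (namespace, name), like a namespaced API."""

    def __init__(self) -> None:
        self._objects = {}

    def create(self, namespace: str, name: str, spec: dict) -> None:
        key = (namespace, name)
        # Names must be unique only within a single namespace.
        if key in self._objects:
            raise ValueError(f"{name!r} already exists in {namespace!r}")
        self._objects[key] = spec

    def list_names(self, namespace: str) -> list:
        # Each team sees only the objects in its own namespace.
        return sorted(n for ns, n in self._objects if ns == namespace)


if __name__ == "__main__":
    api = ToyApiServer()
    api.create("team-a", "web", {"replicas": 2})
    api.create("team-b", "web", {"replicas": 5})  # same name, no conflict
    print(api.list_names("team-a"))
```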

VMware Tanzu is a modular, application-aware platform that enables developers to build and deploy software quickly and securely on any compliant public cloud or on-premises Kubernetes cluster.

Businesses depend on consistently delivering and optimizing modern software to give their customers the best experiences and gain a competitive edge on any cloud. This includes both cloud-native applications and applications modernized for the cloud, which increases complexity.

VMware Tanzu reduces this complexity. It is a modular platform for cloud-native applications that makes them simpler to build, deliver, and run in a multi-cloud environment, allowing innovation to take place as you see fit.

Your developers can be at their most creative and productive with VMware Tanzu. Streamline and secure the path to production for continuous value delivery. And deploy highly efficient and scalable applications that satisfy your users and help your company grow.

We deploy secure, highly available clusters in native Kubernetes and provide scalability, workload diversity, and continuous availability.

We protect your infrastructure, ensure compliance, and isolate compute workloads.

We can help you implement Kubernetes best practices to enhance your CI/CD pipelines and DevSecOps processes.


DevSecOps integrates security at every stage of the software development lifecycle (SDLC), from initial design through integration, testing, deployment, and software delivery. The goal is to deliver robust and secure software applications.